SparseGPT (ICML 2023)

GPT-family models can be pruned to at least 50% sparsity in one shot, without any retraining, with minimal loss of accuracy.
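SparseGPT prunes one layer at a time. For each linear layer with weights W and calibration inputs X, it looks for a sparse Ŵ that keeps ŴX close to WX. The sketch below is a deliberately simplified version of that layer-wise objective, not the SparseGPT algorithm itself. It picks the mask by weight magnitude and then refits the surviving weights of each row with an exact least-squares solve. SparseGPT instead uses an OBS-style, second-order saliency and updates the remaining weights column by column with shared inverse-Hessian information, which is what makes it fast enough for very large models. The function names and shapes are assumptions made for illustration.

```python
import numpy as np

def prune_rows_lstsq(W, X, sparsity=0.5):
    """Prune each row of W (d_out x d_in) to `sparsity`, then refit the kept
    weights so that W_pruned @ X stays close to W @ X (least squares).

    X: calibration inputs, shape (d_in, n_samples).
    Simplified illustration only; not SparseGPT's OBS-based update.
    """
    d_out, d_in = W.shape
    k = d_in - int(round(d_in * sparsity))    # weights kept per row
    H = X @ X.T                               # (d_in, d_in), proportional to the layer-wise Hessian
    W_new = np.zeros_like(W)
    for r in range(d_out):
        keep = np.argsort(-np.abs(W[r]))[:k]  # mask: largest-magnitude weights
        # Normal equations for min ||w_K^T X_K - w^T X||^2:  H[K,K] w_K = H[K,:] w
        rhs = H[keep, :] @ W[r]
        W_new[r, keep] = np.linalg.solve(H[np.ix_(keep, keep)], rhs)
    return W_new

def magnitude_prune(W, sparsity=0.5):
    """Baseline: keep the same largest-magnitude weights, no refit."""
    d_in = W.shape[1]
    k = d_in - int(round(d_in * sparsity))
    W_new = np.zeros_like(W)
    for r in range(W.shape[0]):
        keep = np.argsort(-np.abs(W[r]))[:k]
        W_new[r, keep] = W[r, keep]
    return W_new

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 16))
X = rng.standard_normal((16, 256))            # toy calibration batch
W_ls = prune_rows_lstsq(W, X, sparsity=0.5)
W_mag = magnitude_prune(W, sparsity=0.5)

err_ls = np.linalg.norm(W @ X - W_ls @ X)
err_mag = np.linalg.norm(W @ X - W_mag @ X)
print(f"output error  refit: {err_ls:.2f}  magnitude only: {err_mag:.2f}")
```

Because the refit is the least-squares optimum for the chosen mask, its output error can never exceed that of plain magnitude pruning with the same mask.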

Approximation

Deep models are fundamentally composed of matrices.

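Therefore, they can be compressed with matrix compression (low-rank approximation) techniques, such as truncated Singular Value Decomposition (SVD):

$$W \approx U_k \Sigma_k V_k^\top$$

where $W \in \mathbb{R}^{m \times n}$, the columns of $U_k \in \mathbb{R}^{m \times k}$ and $V_k \in \mathbb{R}^{n \times k}$ are the top-$k$ left and right singular vectors of $W$, and $\Sigma_k \in \mathbb{R}^{k \times k}$ is the diagonal matrix of its $k$ largest singular values.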

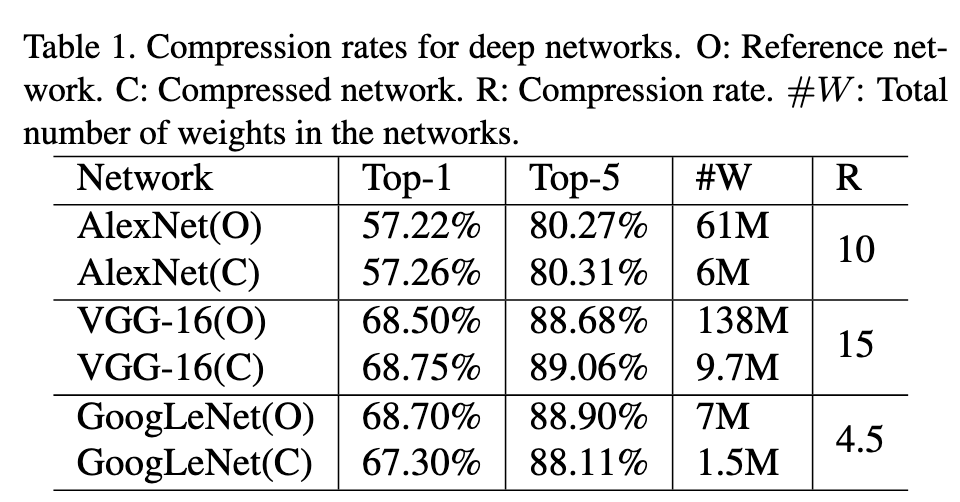
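A minimal NumPy sketch of truncated SVD for a single m × n weight matrix, assuming the rank k is chosen by hand. Storing the two factors costs k(m + n) numbers instead of mn, and by the Eckart-Young theorem the Frobenius error equals the root-sum-square of the discarded singular values.

```python
import numpy as np

def truncated_svd(W, k):
    """Return A (m x k) and B (k x n) such that A @ B is the best rank-k approximation of W."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :k] * S[:k]      # fold the singular values into the left factor
    B = Vt[:k, :]
    return A, B, S

rng = np.random.default_rng(0)
m, n, k = 512, 256, 32
W = rng.standard_normal((m, n))
A, B, S = truncated_svd(W, k)

err = np.linalg.norm(W - A @ B)                # Frobenius norm of the residual
expected = np.sqrt(np.sum(S[k:] ** 2))         # Eckart-Young: discarded singular values
params_dense, params_lowrank = m * n, k * (m + n)
print(f"params: {params_dense} -> {params_lowrank} ({params_dense / params_lowrank:.1f}x smaller)")
print(f"error {err:.4f} vs discarded-spectrum norm {expected:.4f}")
```

A random Gaussian matrix compresses poorly, so the error here is large; the point is the bookkeeping, not the error level.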

Standard SVD (NIPS 2014)

Speeds up CNN layers by a factor of 2–13× with negligible loss of performance.
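To see where such a speedup comes from, the sketch below flattens a convolution's filter bank into a C_out × (C_in·kh·kw) matrix, truncates it to rank k, and runs the layer as a k-filter convolution followed by a 1×1 convolution. This matrix-SVD scheme is an assumption chosen for illustration and not necessarily the decomposition used in the NIPS 2014 paper. The convolution is written as a matrix product over im2col patches so that NumPy alone suffices.

```python
import numpy as np

def conv_macs(h, w, c_out, c_in, kh, kw, rank=None):
    """Multiply-accumulates of a conv producing an h x w output map.
    With `rank`, count a rank-filter kh x kw conv followed by a 1x1 conv."""
    if rank is None:
        return h * w * c_out * c_in * kh * kw
    return h * w * rank * (c_in * kh * kw + c_out)

c_out, c_in, kh, kw, rank = 256, 256, 3, 3, 64
dense = conv_macs(56, 56, c_out, c_in, kh, kw)
factored = conv_macs(56, 56, c_out, c_in, kh, kw, rank)
print(f"theoretical speedup: {dense / factored:.1f}x")

# Numerical check on random im2col patches: each column is one flattened
# (c_in, kh, kw) receptive field, so the conv is simply W_mat @ patches.
rng = np.random.default_rng(0)
W_mat = rng.standard_normal((c_out, c_in * kh * kw))
patches = rng.standard_normal((c_in * kh * kw, 100))

U, S, Vt = np.linalg.svd(W_mat, full_matrices=False)
first = Vt[:rank]                      # `rank` filters, each of size c_in*kh*kw
second = U[:, :rank] * S[:rank]        # 1x1 conv mixing rank -> c_out channels
y_factored = second @ (first @ patches)      # two cheap layers
y_lowrank = (second @ first) @ patches       # same rank-k weights in one layer
```

For these sizes the ratio is 589,824 / 163,840 = 3.6× per output position. This is a MAC count only; wall-clock gains also depend on memory traffic and kernel support.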

Low rank + Sparse (CVPR 2017)
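The low-rank-plus-sparse idea writes W ≈ L + S, where L has low rank and S has few nonzeros that capture what L misses. The sketch below uses a simple greedy two-step heuristic: truncated SVD for L, then the largest-magnitude residual entries for S. This heuristic is an assumption for illustration and is not the optimization procedure of the CVPR 2017 paper.

```python
import numpy as np

def low_rank_plus_sparse(W, rank, nnz):
    """Greedy W ~= L + S: L = best rank-`rank` approximation, S = top-`nnz` residual entries."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    L = (U[:, :rank] * s[:rank]) @ Vt[:rank]
    R = W - L
    S = np.zeros_like(W)
    idx = np.argsort(-np.abs(R), axis=None)[:nnz]   # flat indices of the largest residuals
    S.flat[idx] = R.flat[idx]
    return L, S

rng = np.random.default_rng(0)
# Toy matrix that is genuinely low-rank plus a few large outliers.
W = rng.standard_normal((64, 8)) @ rng.standard_normal((8, 64))
W[rng.integers(0, 64, 20), rng.integers(0, 64, 20)] += 10.0
L, S = low_rank_plus_sparse(W, rank=8, nnz=50)

err_L = np.linalg.norm(W - L)          # low rank only
err_LS = np.linalg.norm(W - L - S)     # low rank + sparse
print(f"error  L: {err_L:.3f}  L+S: {err_LS:.3f}")
```

Storing S costs its nonzero values plus their indices, so nnz trades memory for accuracy in the same way the rank does.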

Experiments:

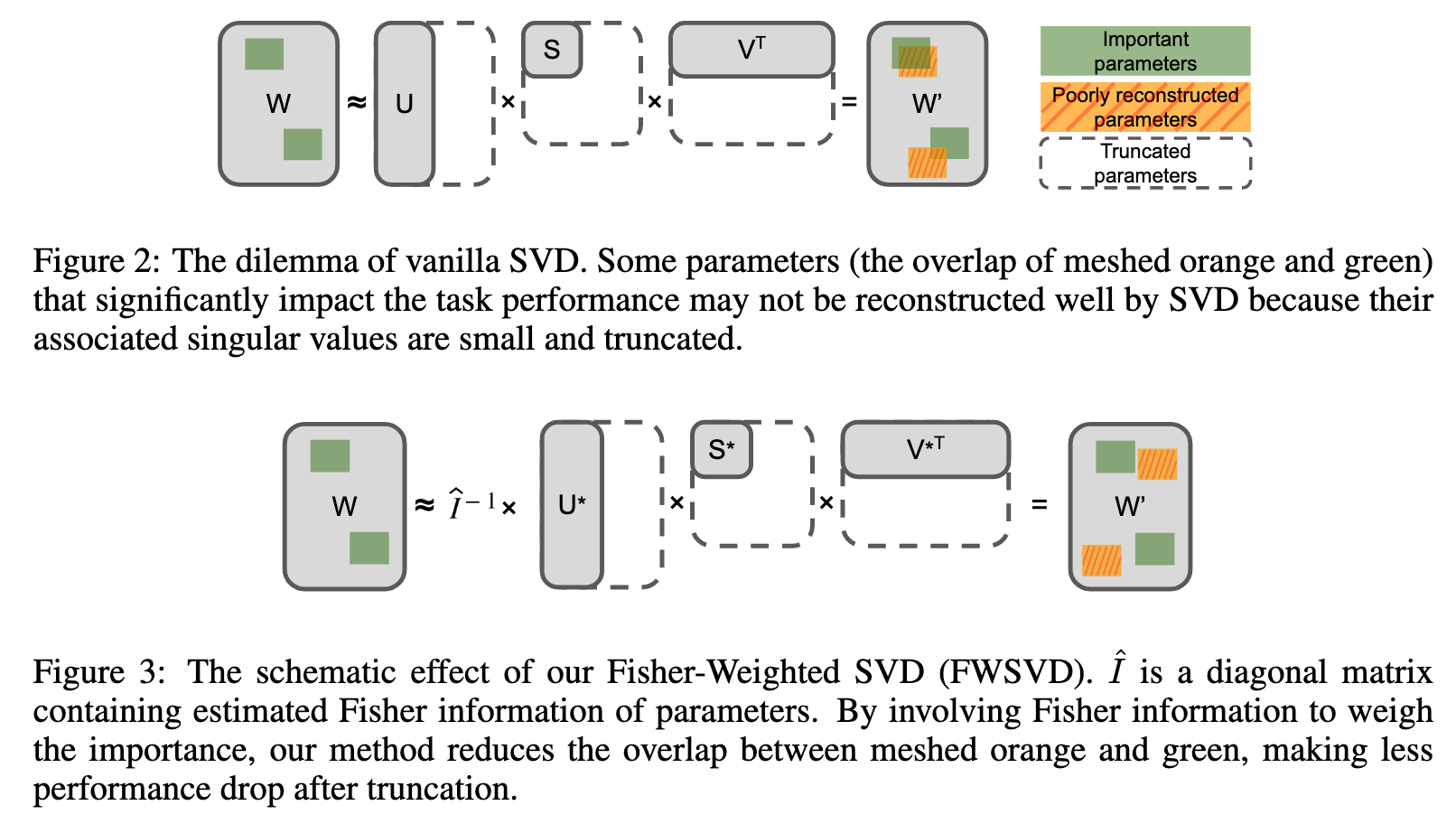

FWSVD (ICLR 2022)

FWSVD cont'd

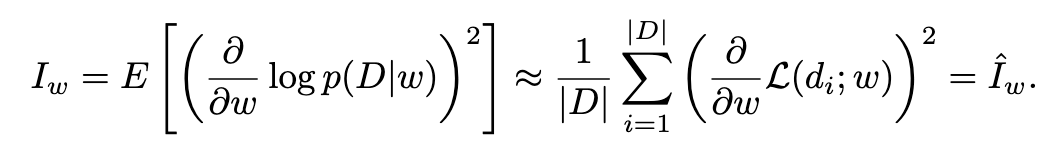
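FWSVD weights the reconstruction error by how much each parameter matters for the task loss. A minimal sketch, assuming a per-weight Fisher estimate `fisher` with the same shape as W is already available (for example, averaged squared gradients). Following the row-wise simplification as we understand it from FWSVD, each row's importance is the sum of its Fisher values. The rows of W are scaled by the square root of that importance before the SVD, and the scaling is undone afterwards. All function and variable names are hypothetical.

```python
import numpy as np

def row_importance(fisher, eps=1e-8):
    """Sum per-weight Fisher estimates along each row; eps keeps every row weight positive."""
    return fisher.sum(axis=1) + eps

def fwsvd(W, fisher, k):
    """Rank-k factorization W ~= A @ B that minimizes the row-importance-weighted error."""
    d = np.sqrt(row_importance(fisher))                 # diagonal of I_hat^(1/2)
    U, S, Vt = np.linalg.svd(d[:, None] * W, full_matrices=False)
    A = (U[:, :k] * S[:k]) / d[:, None]                 # undo the row weighting
    B = Vt[:k]
    return A, B

def weighted_err(W, W_hat, fisher):
    """Objective FWSVD minimizes: sum_i I_i * sum_j (W_ij - W_hat_ij)^2."""
    return float(np.sum(row_importance(fisher)[:, None] * (W - W_hat) ** 2))

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32))
# Toy Fisher values in which some rows matter far more than others.
fisher = rng.random((64, 32)) * rng.choice([0.01, 10.0], size=(64, 1))
A, B = fwsvd(W, fisher, k=8)

U, S, Vt = np.linalg.svd(W, full_matrices=False)
W_plain = (U[:, :8] * S[:8]) @ Vt[:8]                   # ordinary truncated SVD
fw_err = weighted_err(W, A @ B, fisher)
svd_err = weighted_err(W, W_plain, fisher)
print(f"weighted error  FWSVD: {fw_err:.1f}  plain SVD: {svd_err:.1f}")
```

Plain SVD treats every row equally. On the weighted objective it can only tie or lose against FWSVD, because the weighted SVD is exactly optimal for that objective.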

Fisher information matrix:
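For reference, the quantities FWSVD works with can be written as follows. The notation is assumed here: $D$ is the task dataset, $\mathcal{L}$ the task loss, and $\hat{I} = \operatorname{diag}(\hat{I}_1, \dots, \hat{I}_m)$. The Fisher information of each weight is estimated empirically from squared gradients, summed per row, and then used as row weights in the low-rank objective:

```latex
\begin{aligned}
I_{W_{ij}} &\approx \frac{1}{|D|} \sum_{d \in D}
  \left( \frac{\partial \mathcal{L}(d; W)}{\partial W_{ij}} \right)^{2},
\qquad
\hat{I}_{i} = \sum_{j} I_{W_{ij}}, \\[4pt]
\min_{A,\,B} \; \sum_{i} \hat{I}_{i} \sum_{j} \bigl( W_{ij} - (AB)_{ij} \bigr)^{2}
  &= \min_{A,\,B} \; \bigl\lVert \hat{I}^{1/2} (W - AB) \bigr\rVert_F^{2}, \\[4pt]
\hat{I}^{1/2} W = U \Sigma V^{\top}
  \;\Rightarrow\;
A &= \hat{I}^{-1/2} U_k \Sigma_k, \qquad B = V_k^{\top}.
\end{aligned}
```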

Experiments:

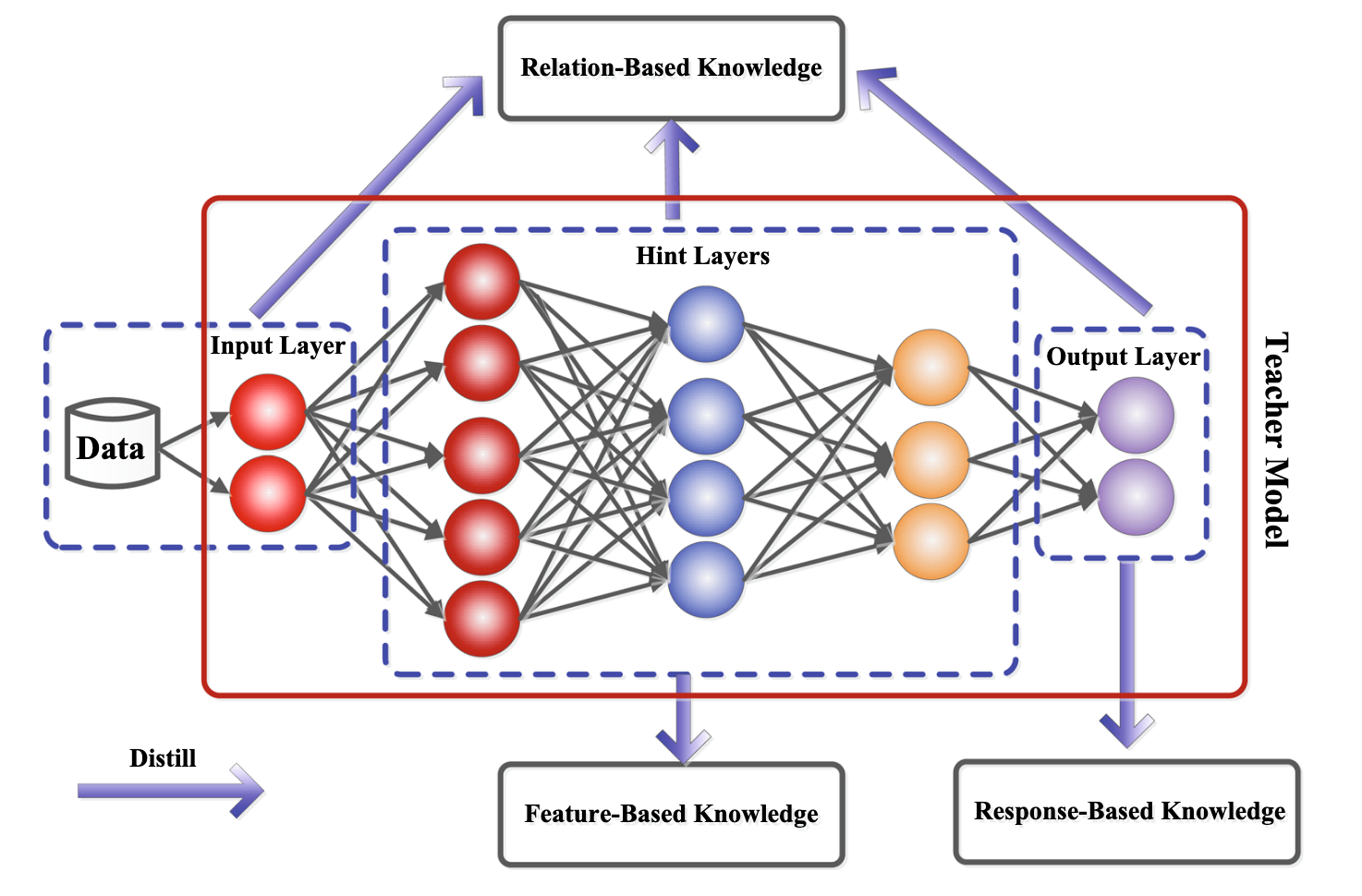

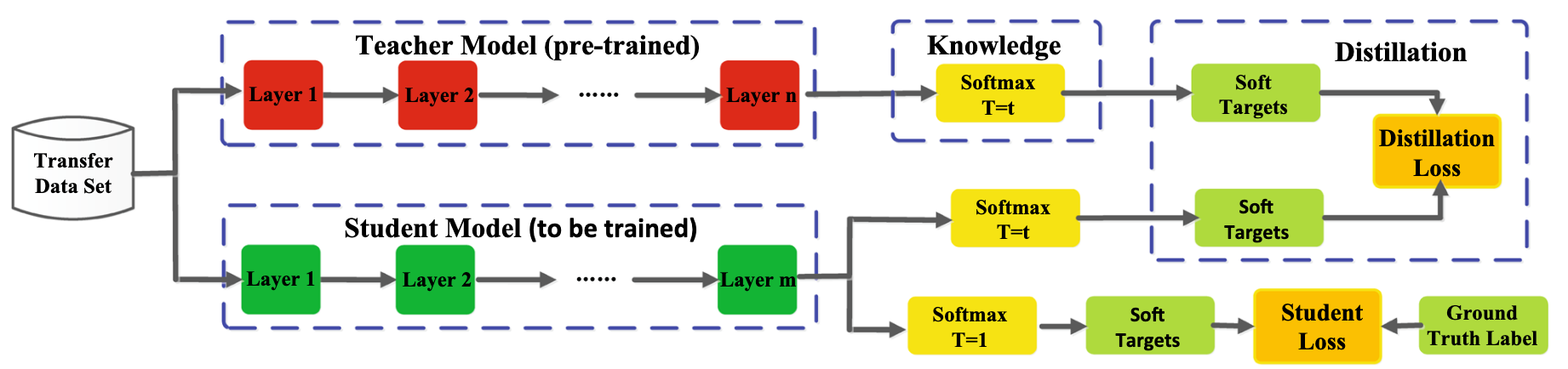

Knowledge Distillation

KD was first proposed by Hinton et al. at the NIPS 2014 Deep Learning Workshop. Its prototype can be traced back to KDD 2006.
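The loss Hinton et al. proposed blends a hard-label cross-entropy with a soft term that matches temperature-softened teacher and student distributions. The soft term is scaled by T² so that its gradients keep a comparable magnitude as the temperature changes. A minimal NumPy sketch for one batch of logits follows. The weighting `alpha` and temperature `T` are hyperparameters whose values here are chosen only for illustration.

```python
import numpy as np

def log_softmax(z, T=1.0):
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)      # numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def kd_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(p_teacher^T || p_student^T) + (1 - alpha) * CE(labels, p_student)."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s = log_softmax(student_logits, T)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1).mean()
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * T**2 * kl + (1 - alpha) * ce

teacher = np.array([[8.0, 3.0, -2.0], [0.5, 6.0, 1.0]])
labels = np.array([0, 1])
# A student that copies the teacher pays no soft loss.
print(kd_loss(teacher.copy(), teacher, labels, alpha=1.0))   # prints 0.0
print(kd_loss(np.zeros_like(teacher), teacher, labels))
```

At a higher T, the teacher's non-target probabilities grow, so the student also learns how the teacher ranks the wrong classes rather than only the argmax.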

Why does KD work?

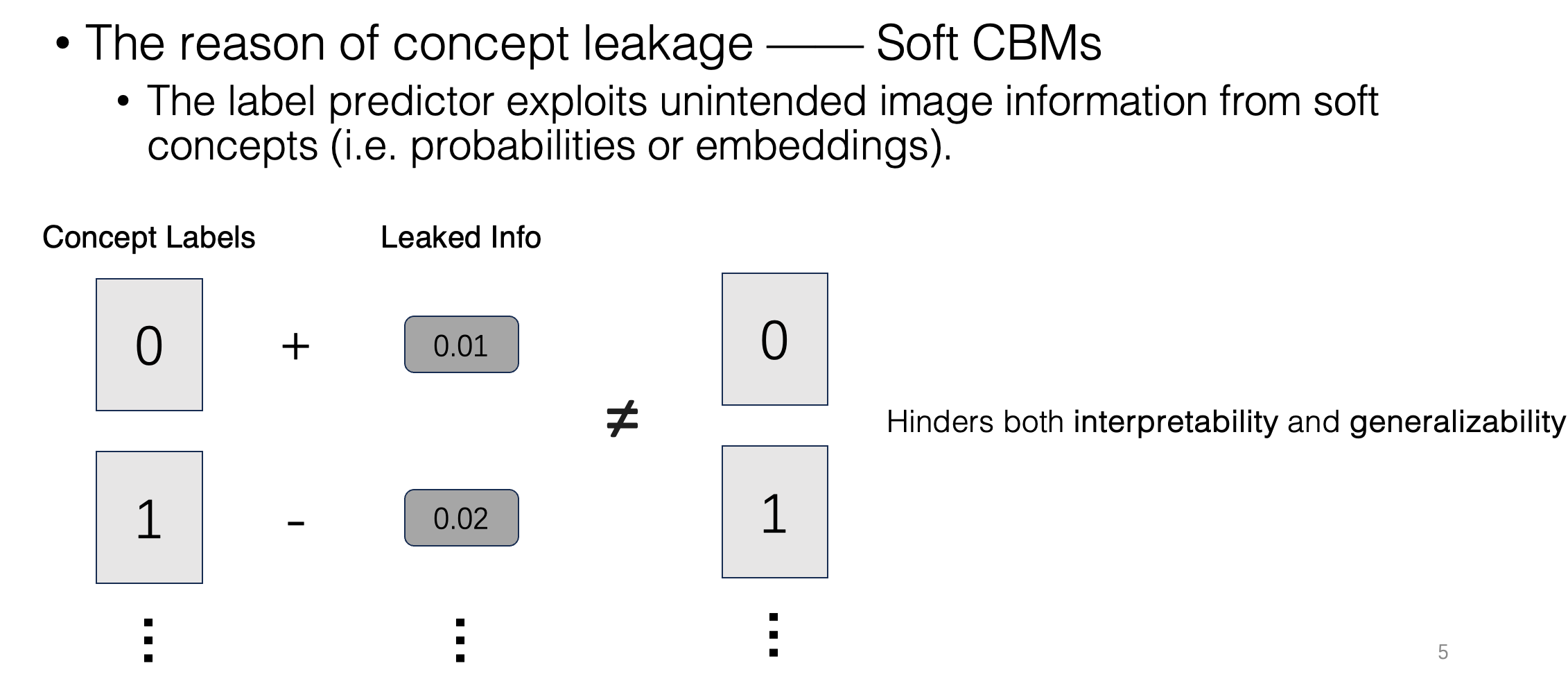

Information Leakage!

Adapted from Yibo's slides.

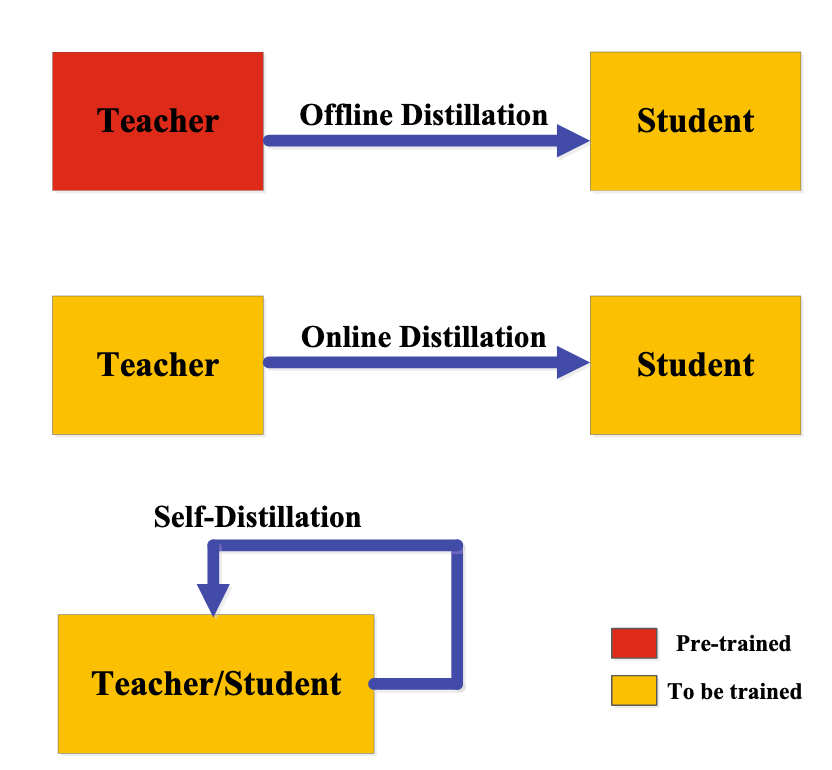

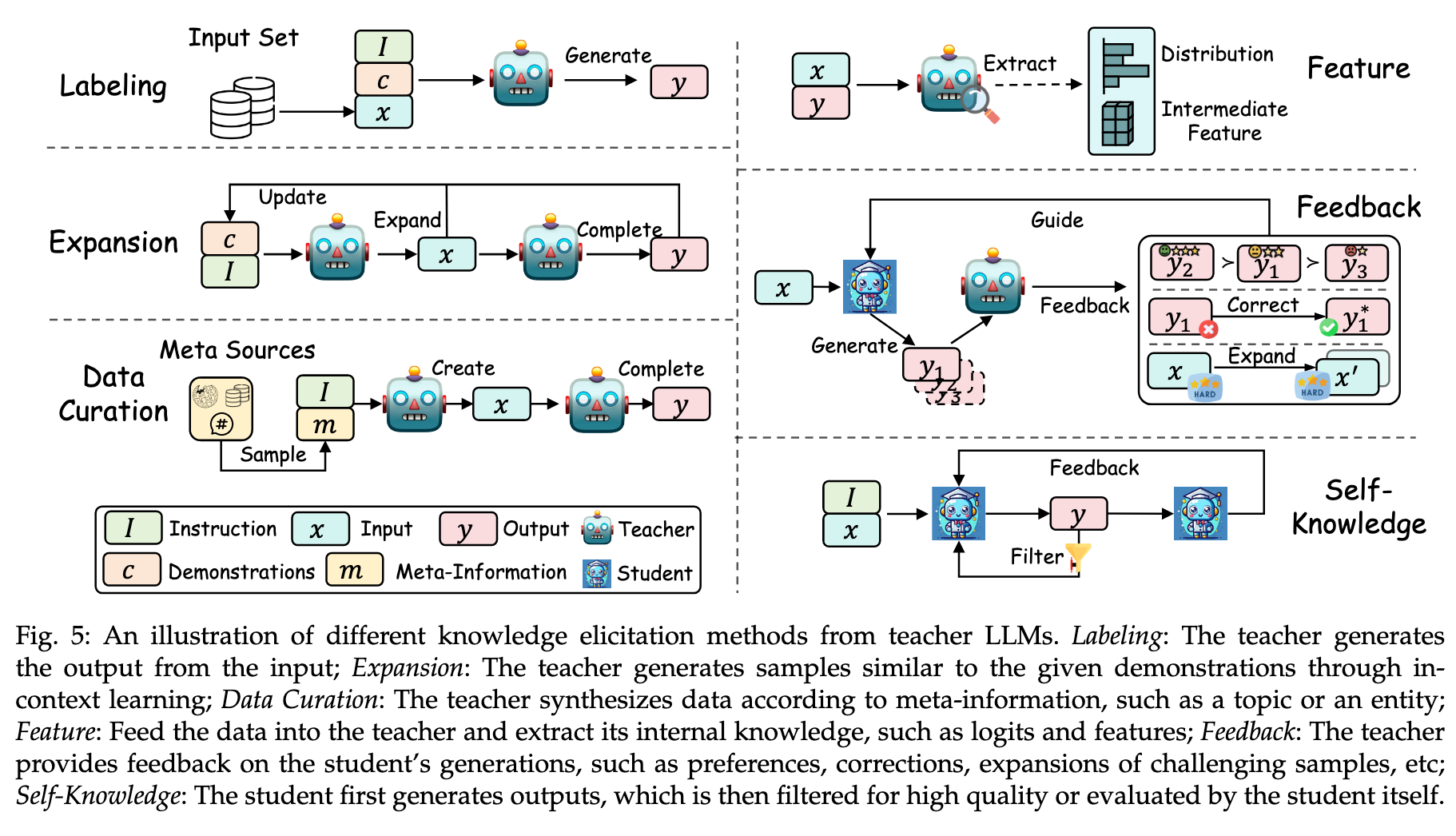

KD schemes

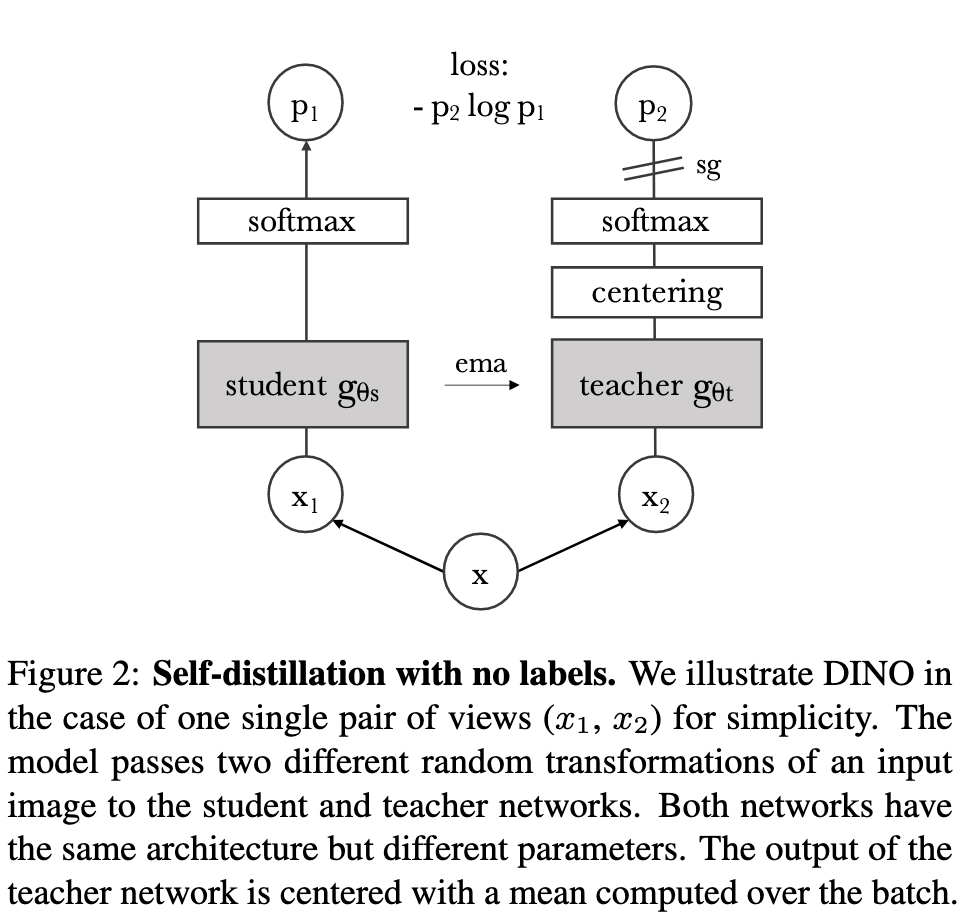

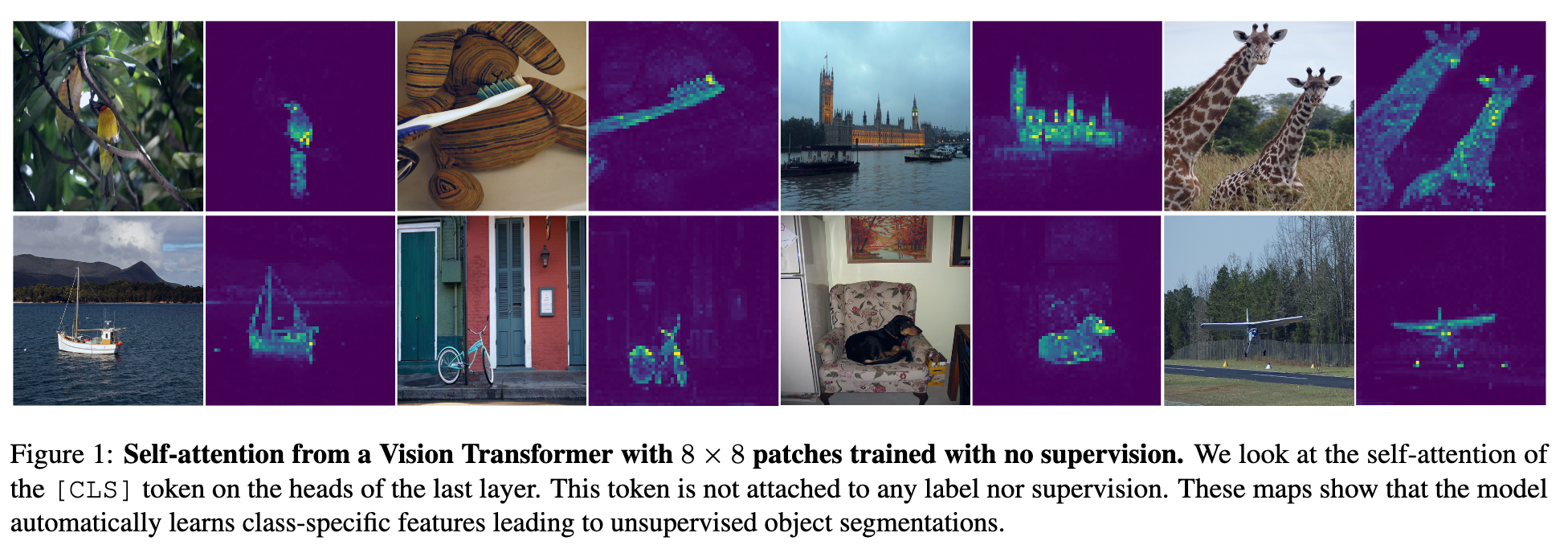

DINO (ICCV 2021)

DINO cont'd
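DINO trains a student to match a teacher on different augmented views of the same image, with no labels. The teacher is an exponential moving average (EMA) of the student, and its outputs are centered and sharpened to avoid collapse. The NumPy sketch below shows only the loss and the two update rules. The network and the multi-crop view pairing are left out, the default temperatures and momenta are typical values reported for DINO, and the function names are assumptions.

```python
import numpy as np

def log_softmax(z, tau):
    z = z / tau
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def dino_loss(student_out, teacher_out, center, tau_s=0.1, tau_t=0.04):
    """Cross-entropy H(P_t, P_s); the teacher output is centered, then sharpened (low tau_t)."""
    p_t = np.exp(log_softmax(teacher_out - center, tau_t))   # treated as a constant target
    log_p_s = log_softmax(student_out, tau_s)
    return float(-(p_t * log_p_s).sum(axis=-1).mean())

def update_center(center, teacher_out, m=0.9):
    """EMA of the batch-mean teacher output."""
    return m * center + (1 - m) * teacher_out.mean(axis=0)

def ema_update(teacher_params, student_params, lam=0.996):
    """Teacher weights track the student: theta_t <- lam * theta_t + (1 - lam) * theta_s."""
    return {k: lam * teacher_params[k] + (1 - lam) * student_params[k] for k in teacher_params}

rng = np.random.default_rng(0)
K = 16                                    # output (prototype) dimension, toy size
s_out, t_out = rng.standard_normal((4, K)), rng.standard_normal((4, K))
center = np.zeros(K)
loss = dino_loss(s_out, t_out, center)
center = update_center(center, t_out)
teacher = ema_update({"w": np.ones(3)}, {"w": np.zeros(3)})
```

Centering pushes the teacher toward uniform outputs while the low teacher temperature pushes toward one-hot outputs; the balance of the two is what keeps training from collapsing.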

KD in the LLM era

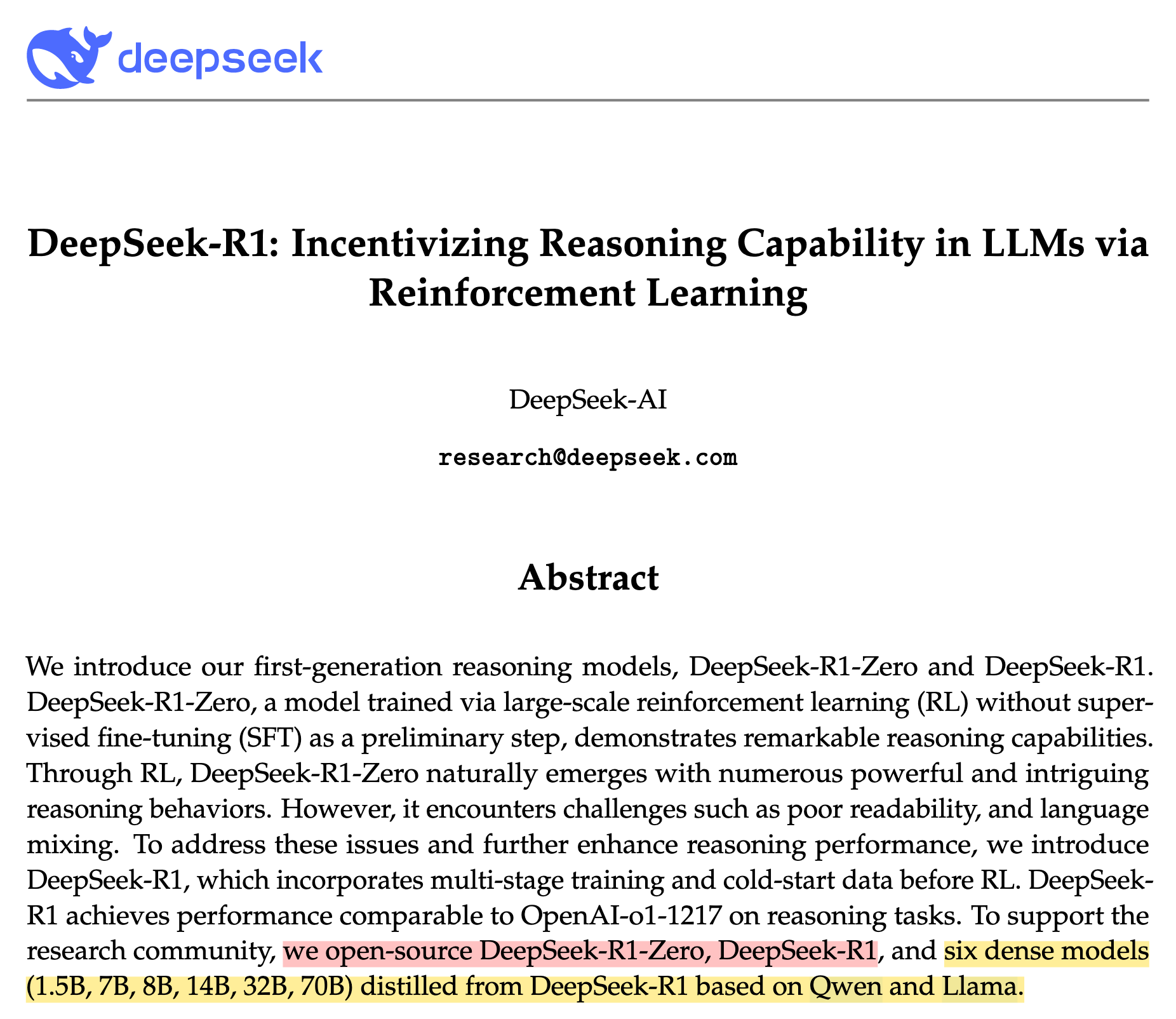

DeepSeek-R1 (arXiv 2025)

DeepSeek-R1-Distill-Qwen

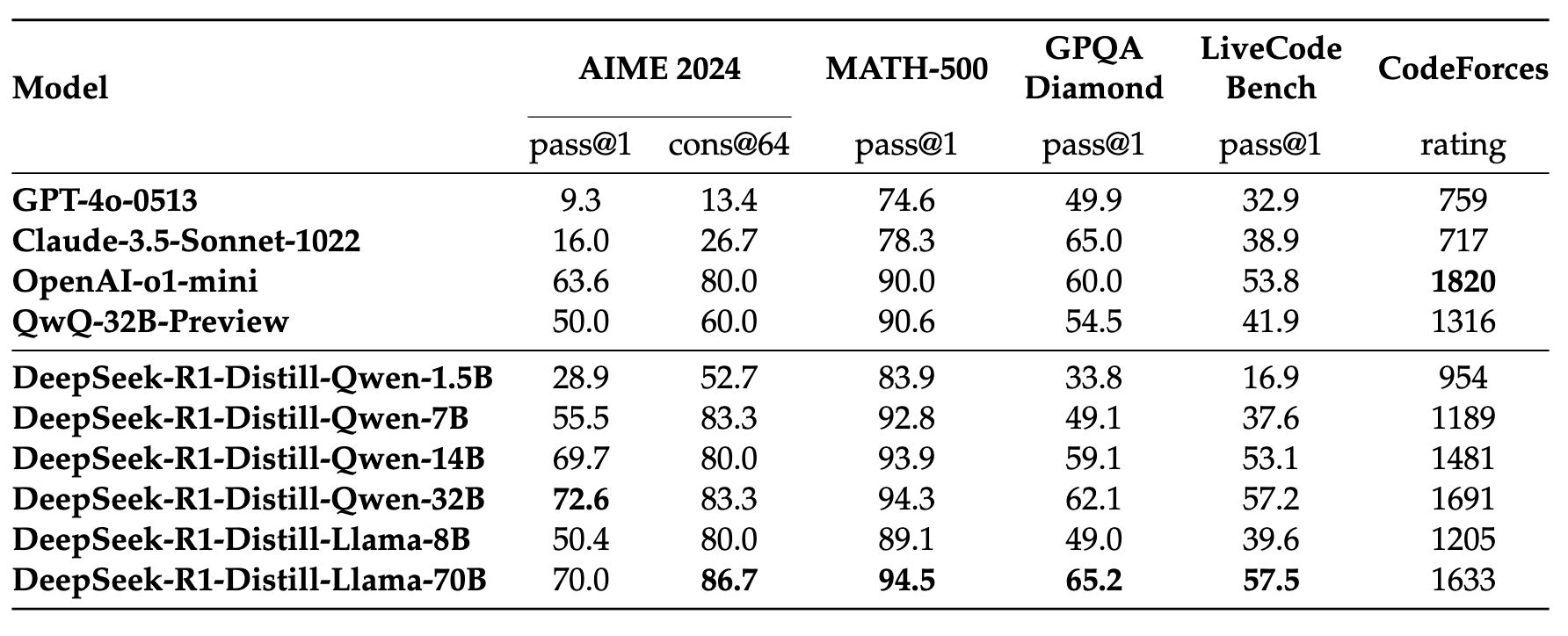

They use DeepSeek-R1 as the teacher model to generate 800K training samples, then fine-tune several small dense models on them. The results are promising:
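Distillation here is sequence-level: the student is fine-tuned with ordinary supervised learning on text written by the teacher, not on the teacher's logits. The much-simplified sketch below covers only the data side. It assumes a hypothetical `teacher_generate` sampler and a hypothetical answer checker `is_correct`. Keeping only verified samples loosely mirrors the rejection sampling the DeepSeek-R1 report describes for its reasoning data, but the real pipeline has more stages.

```python
import itertools
from typing import Callable, Dict, List

def build_distill_set(
    prompts: List[Dict[str, str]],
    teacher_generate: Callable[[str], str],   # hypothetical: samples one teacher completion
    is_correct: Callable[[str, str], bool],   # hypothetical: checks a completion against a reference
    samples_per_prompt: int = 4,
) -> List[Dict[str, str]]:
    """Rejection sampling: keep teacher completions that pass the checker, as SFT pairs."""
    dataset = []
    for item in prompts:
        for _ in range(samples_per_prompt):
            completion = teacher_generate(item["prompt"])
            if is_correct(completion, item["answer"]):
                dataset.append({"prompt": item["prompt"], "completion": completion})
                break          # one accepted sample per prompt in this sketch
    return dataset

# Toy stand-ins so the sketch runs end to end.
_fake_outputs = itertools.cycle(["<think>2+2=5</think> 5", "<think>2+2=4</think> 4"])

def toy_teacher(prompt: str) -> str:
    return next(_fake_outputs)     # ignores the prompt; stands in for a real LLM call

def toy_checker(completion: str, answer: str) -> bool:
    return completion.strip().endswith(answer)

data = build_distill_set([{"prompt": "What is 2+2?", "answer": "4"}], toy_teacher, toy_checker)
print(data)
```

The resulting prompt/completion pairs are then used for standard supervised fine-tuning of the small model.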

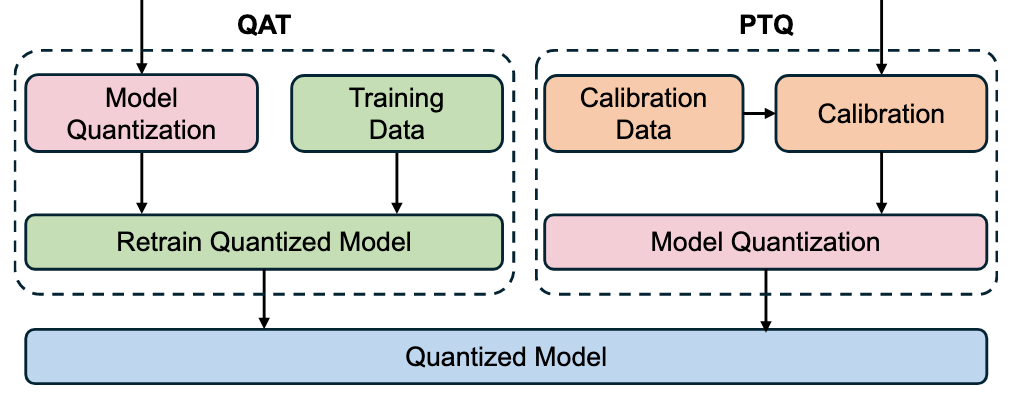

Quantization

The key idea is to map the floating-point weights and/or activations of a model to low-precision representations, such as integers (Quantization for DNN: A Survey).
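A minimal sketch of one common scheme, uniform affine (asymmetric) quantization to 8-bit integers. A real value x maps to q = clamp(round(x / s) + z, 0, 255), where the scale s and zero point z come from the tensor's min and max, and x is recovered approximately as s·(q − z). Per-tensor min/max calibration is an assumption here; practical toolkits also use per-channel scales and other calibration rules.

```python
import numpy as np

def quantize_uint8(x):
    """Asymmetric per-tensor quantization to [0, 255]; returns codes, scale, zero point."""
    lo, hi = min(float(x.min()), 0.0), max(float(x.max()), 0.0)   # range must contain 0
    scale = (hi - lo) / 255.0 if hi > lo else 1.0                  # avoid /0 for all-zero input
    zero_point = int(round(-lo / scale))
    q = np.clip(np.round(x / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    return scale * (q.astype(np.float32) - zero_point)

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.05, size=1000).astype(np.float32)
q, s, z = quantize_uint8(w)
w_hat = dequantize(q, s, z)
print(f"{w.nbytes} -> {q.nbytes} bytes, max abs error {np.abs(w - w_hat).max():.5f} "
      f"(scale/2 = {s / 2:.5f})")
```

Storage drops 4× relative to float32, and each value's rounding error stays within half a quantization step.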

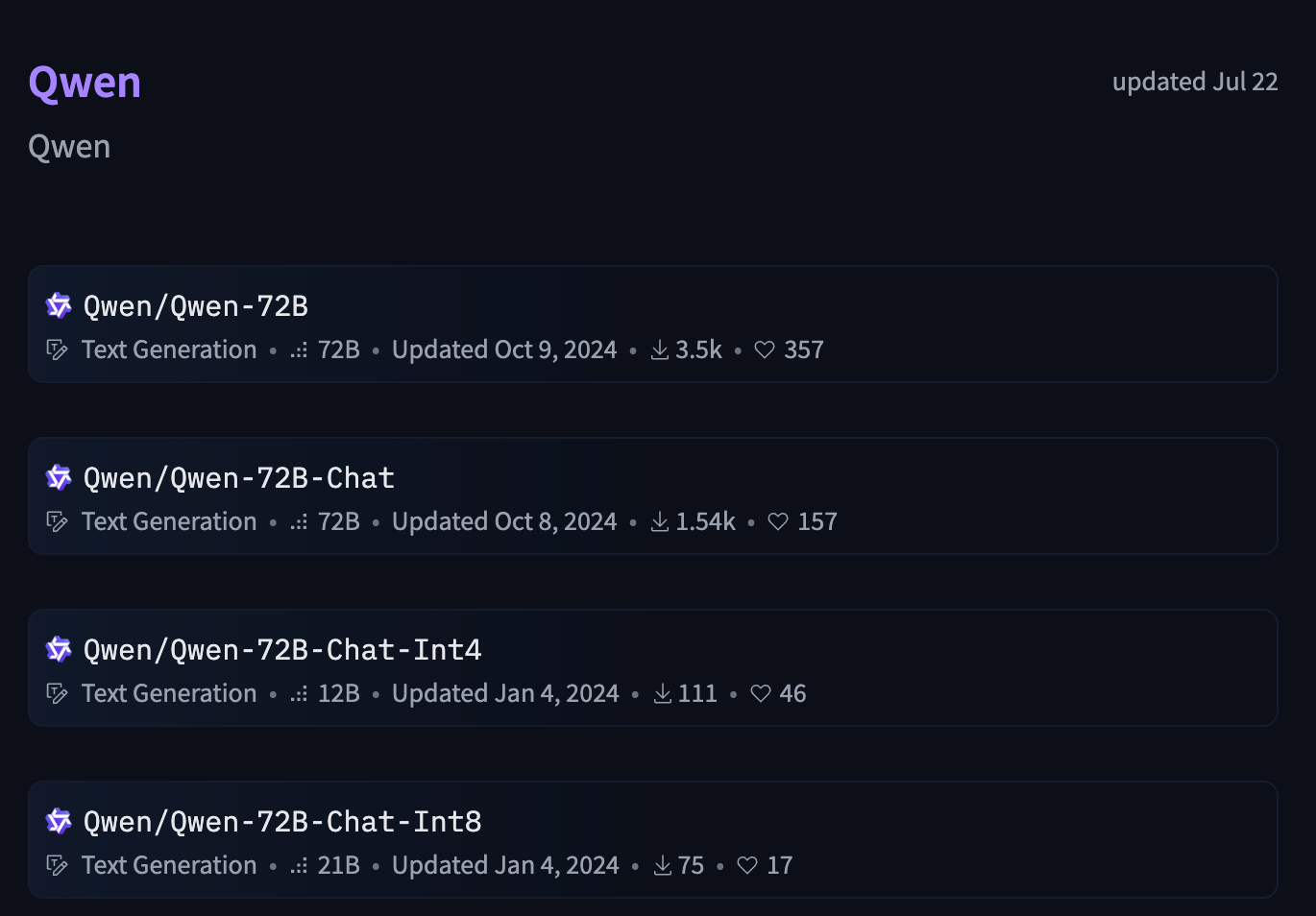

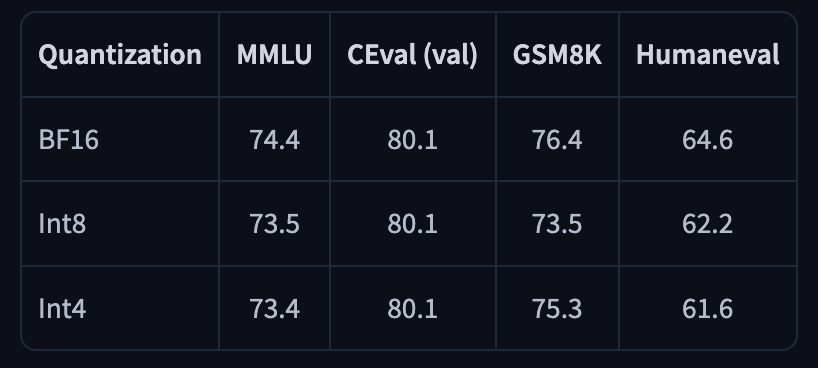

Qwen Quantization

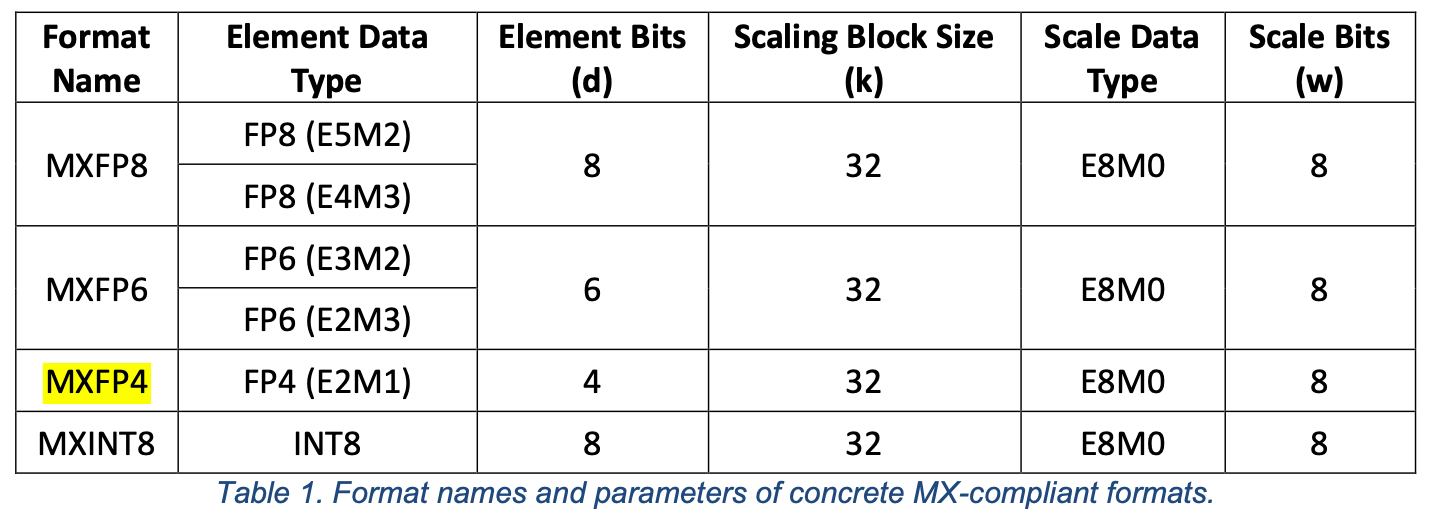

gpt-oss Quantization

gpt-oss-20b can run on systems with as little as 16 GB of memory! The key enabler is MXFP4, a new block floating-point format.
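MXFP4 (one of the OCP Microscaling formats) stores each block of 32 values as 4-bit FP4 (E2M1) elements that share one 8-bit power-of-two scale. That works out to (32·4 + 8)/32 = 4.25 bits per value. The NumPy sketch below simulates the encoding. It picks each block's shared exponent from the block's largest magnitude and rounds the scaled values to the nearest E2M1 value, saturating at ±6. It is an illustrative assumption, not the kernel gpt-oss actually uses; tie-breaking and special values are simplified.

```python
import numpy as np

FP4_E2M1 = np.array([0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0])  # non-negative E2M1 values
BLOCK = 32
EMAX_ELEM = 2   # exponent of the largest E2M1 value (6 = 1.5 * 2**2)

def mxfp4_quantize(x):
    """Simulated MXFP4: per-block shared exponents plus FP4 element values."""
    blocks = x.reshape(-1, BLOCK)
    amax = np.abs(blocks).max(axis=1)
    safe = np.where(amax > 0, amax, 1.0)                    # all-zero block: any scale works
    shared_exp = np.floor(np.log2(safe)).astype(np.int32) - EMAX_ELEM
    scaled = blocks / np.exp2(shared_exp)[:, None]          # now |scaled| < 8
    # Nearest representable magnitude (ties go to the smaller value here);
    # anything above 6 saturates to 6.
    idx = np.abs(np.abs(scaled)[..., None] - FP4_E2M1).argmin(axis=-1)
    return shared_exp, np.sign(scaled) * FP4_E2M1[idx]

def mxfp4_dequantize(shared_exp, elems):
    return (elems * np.exp2(shared_exp)[:, None]).reshape(-1)

rng = np.random.default_rng(0)
x = rng.standard_normal(4 * BLOCK)
exps, elems = mxfp4_quantize(x)
x_hat = mxfp4_dequantize(exps, elems)
bits_per_value = (BLOCK * 4 + 8) / BLOCK
print(f"{bits_per_value} bits/value, max abs error {np.abs(x - x_hat).max():.3f}")
```

Sharing one scale per 32 values is what keeps the overhead at a quarter bit per value, while the per-block scale still adapts to local magnitude.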

THANKS